About MemMachine

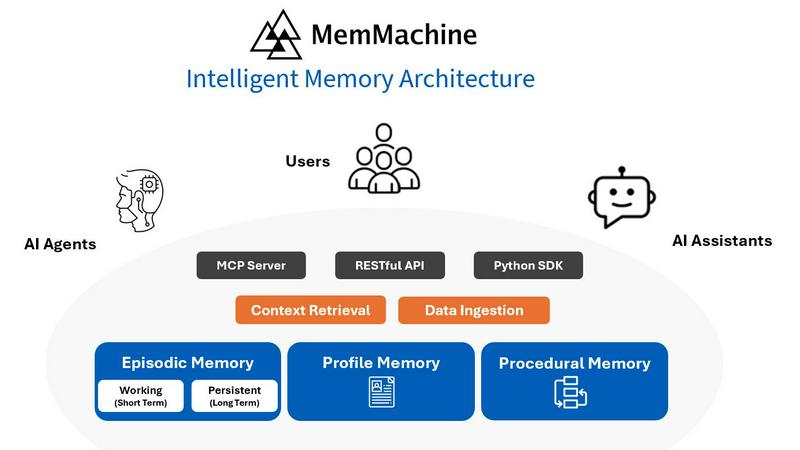

Imagine having a conversation with a friend who forgets everything you said the moment you walk away. That's what using most AI applications is like today: they start from scratch every time. MemMachine addresses this. It's an open-source memory layer, software you add to your AI agents and applications to give them persistent memory. Think of it as a hippocampus for your AI, letting it learn, store, and recall information from past interactions. This turns generic chatbots into personalized assistants that understand context and improve with each conversation.

It's built for developers, engineers, and teams creating AI-powered tools, from customer support bots to personal health companions. The core value is simple: by letting your applications remember, MemMachine enables deeper personalization and an evolving relationship with every user.

Features of MemMachine

Persistent & Evolving Memory

This is the heart of MemMachine. It allows your AI application to maintain a continuous memory across different chat sessions, even if you switch between different AI models or agents. It builds a sophisticated, evolving profile of each user, remembering their preferences, past questions, and unique context. This means every new interaction is enriched with history, making the AI feel more understanding and personal, instead of starting over with a blank slate every time.

Multi-Platform & LLM Integration

MemMachine is designed to work with the tools you already use. It supports LLM providers such as OpenAI and AWS Bedrock as well as locally hosted models through Ollama, and it can also run as an MCP (Model Context Protocol) server so that MCP-compatible agents and clients can use its memory. This flexibility means you aren't locked into one vendor: you can build your agent with your preferred large language model and still give it MemMachine's memory layer, making it a versatile choice for most tech stacks.

Flexible Deployment & Data Control

You have complete control over how and where you run MemMachine. You can install it with pip, run it locally on your own machine for development, or deploy it in your private cloud for production applications. Because you choose the infrastructure, you retain full ownership of your users' memory data and keep sensitive information within your own environment, which addresses critical privacy and security concerns.

Open-Source with Strong Community

MemMachine is fully open-source, which means its code is transparent, auditable, and free to use. This is backed by comprehensive documentation to help you get started and an active, supportive community on platforms like Discord. Being open-source fosters innovation, allows for customization, and ensures the tool evolves with input from developers worldwide, giving you confidence in its longevity and support.

Use Cases of MemMachine

Personalized Healthcare Assistants

Transform patient support by creating an AI that remembers individual medical histories, appointment preferences, and treatment plans. As shown in the example, an agent with memory can recall a patient's dislike for morning appointments and fasting requirements, proactively suggesting better options and providing empathetic, tailored care that builds trust and improves health outcomes.

Intelligent Customer Support Bots

Upgrade customer service chatbots from basic FAQ responders to knowledgeable support agents. MemMachine allows the bot to remember a customer's past issues, product preferences, and interaction history. This enables it to provide faster, more accurate solutions without asking the user to repeat information, dramatically improving customer satisfaction and efficiency.

Context-Aware Creative & Research Partners

Build AI assistants for writers, researchers, and developers that act as true long-term partners. The AI can store and retrieve your articles, research notes, code patterns, and creative preferences. Over time, it learns your blind spots and working style, offering challenges and insights tailored specifically to your evolving projects and goals.

Proactive Team Collaboration Agents

Power internal team AI tools that facilitate better collaboration. An agent powered by MemMachine can remember project contexts, team member responsibilities, past decisions, and ongoing tasks. It can proactively surface relevant information, automate follow-ups, and provide intelligent, context-aware insights that improve meeting efficiency and project flow.

Frequently Asked Questions

What exactly is a "memory layer"?

A memory layer is a software component that sits between your AI application (like a chatbot) and its brain (the Large Language Model). While the LLM generates responses, the memory layer is responsible for storing, organizing, and retrieving information from past conversations. MemMachine provides this layer, handling the complex tasks of saving user data, linking related concepts, and feeding the right historical context back to the AI model to inform its current response.
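To make the store, retrieve, and inject cycle concrete, here is a toy sketch in Python. It is not MemMachine's API: the class `ToyMemoryLayer` and its methods `store`, `retrieve`, and `build_prompt` are hypothetical names chosen for illustration, and the naive keyword-overlap ranking stands in for the persistent storage and semantic retrieval a real memory layer would use.

```python
import re
from collections import defaultdict
from dataclasses import dataclass, field


def _tokens(text: str) -> set[str]:
    """Lowercase word tokens used for naive keyword matching."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


@dataclass
class ToyMemoryLayer:
    """Hypothetical in-memory store illustrating store / retrieve / inject.

    Not MemMachine's API: a real memory layer would persist data in a
    database and use semantic (e.g. embedding-based) retrieval instead
    of keyword overlap.
    """
    memories: dict[str, list[str]] = field(default_factory=lambda: defaultdict(list))

    def store(self, user_id: str, text: str) -> None:
        # Save a fact from a past conversation under the user's ID.
        self.memories[user_id].append(text)

    def retrieve(self, user_id: str, query: str, k: int = 2) -> list[str]:
        # Rank this user's memories by how many words they share with the query,
        # dropping memories with no overlap at all.
        q = _tokens(query)
        scored = [(len(q & _tokens(m)), m) for m in self.memories[user_id]]
        scored = [pair for pair in scored if pair[0] > 0]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [m for _, m in scored[:k]]

    def build_prompt(self, user_id: str, message: str) -> str:
        # Feed the retrieved history back to the model alongside the new message.
        context = self.retrieve(user_id, message)
        lines = ["Relevant memories:"] + [f"- {m}" for m in context]
        lines += ["", f"User: {message}"]
        return "\n".join(lines)


if __name__ == "__main__":
    mem = ToyMemoryLayer()
    mem.store("alice", "Alice prefers afternoon appointments.")
    mem.store("alice", "Alice must fast before blood tests.")
    mem.store("bob", "Bob prefers morning appointments.")
    print(mem.build_prompt("alice", "Can I book appointments next week?"))
```

Memories are keyed by user, so Bob's preferences never leak into Alice's prompt; the string returned by `build_prompt` is what would be sent to the LLM in place of the bare user message.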

Is my data private and secure with MemMachine?

Yes, when you self-host it. A core principle of MemMachine is giving you full data control. Because it's open source and supports flexible deployment, you can run it entirely on your own infrastructure (locally or in your private cloud). In that setup, all user memories and profiles are stored in databases you own and manage, not on MemMachine's servers, so you keep full control over sensitive information.

How difficult is it to integrate MemMachine into my existing app?

The process is designed to be developer-friendly. MemMachine can be installed via pip and connects to your application through its API, and the documentation provides guides and examples. Because it supports several LLM providers and can also be accessed over MCP, it will likely fit into your existing setup without an overhaul of your architecture. You can start with basic memory recall and add more advanced features gradually.

Can MemMachine work with AI models I run locally, like Ollama?

Yes, this is a key strength. MemMachine works with locally hosted models such as those served by Ollama as well as with major cloud providers, and its MCP server lets MCP-compatible agents and clients access the same memory. This lets you build personalized AI agents that are both private (using local models) and context-aware (using MemMachine), without depending on external API services.
