Agenta

Agenta is an open-source platform that helps teams build and manage reliable AI apps together.


About Agenta

Agenta is an open-source platform designed to help teams build and ship reliable applications powered by large language models (LLMs). LLM outputs can be unpredictable, prompts tend to end up scattered across codebases and documents, and debugging often turns into guesswork. Agenta is built for the whole team (developers, product managers, and subject matter experts) to tackle these problems together. The platform acts as a single source of truth for the LLM development workflow: you can experiment with different prompts and models, run automated evaluations so that decisions rest on evidence rather than gut feeling, and observe live applications to pinpoint issues quickly. By bringing everyone onto shared tooling, Agenta turns chaotic, siloed processes into a structured practice known as LLMOps, helping teams move from experimentation to production with confidence.

Features of Agenta

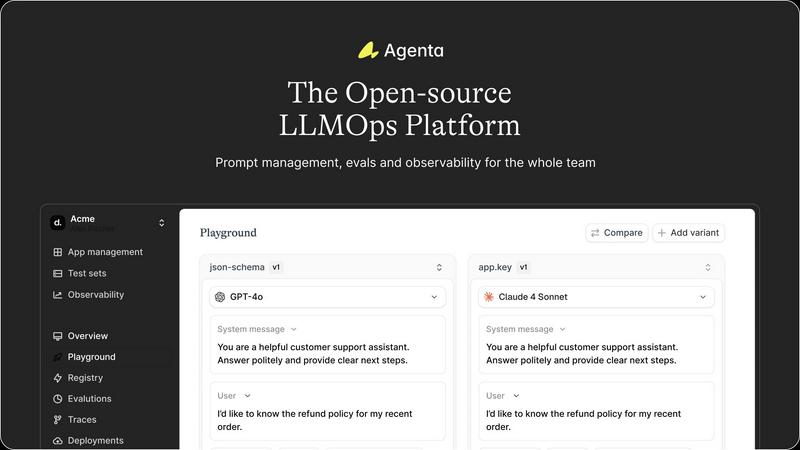

Unified Playground for Experimentation

Agenta provides a central playground where you can safely test and compare different prompts, models, and parameters side-by-side. This visual interface allows both technical and non-technical team members to experiment without writing code. You can instantly see how changes affect outputs, making prompt engineering a collaborative and iterative process. Found a problematic response in production? You can save it directly to a test set and debug it right in the playground, closing the feedback loop.

Automated and Flexible Evaluation

Replace guesswork with a systematic evaluation process. Agenta lets you set up automated tests using several methods: an LLM-as-a-judge, built-in evaluators, or your own custom evaluation code. Crucially, you can evaluate the full trace of an AI agent's reasoning, not just the final output, which shows where a run succeeds or fails. This lets you validate each change before it reaches your users.
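
As a rough illustration of what "custom evaluation code" can look like, here is a minimal, framework-agnostic sketch in Python. It does not use Agenta's SDK: the evaluator name and signature (`keyword_coverage_evaluator(output, required_keywords)`), the `run_eval` helper, and the test-set shape are all hypothetical, and Agenta's own custom-evaluator interface may differ. The evaluator scores an output by the fraction of required keywords it contains, and `run_eval` averages that score over a small test set.

```python
import re
from typing import Callable


def keyword_coverage_evaluator(output: str, required_keywords: list[str]) -> float:
    """Hypothetical custom evaluator: fraction of required keywords that
    appear in the output as whole words (case-insensitive)."""
    if not required_keywords:
        return 1.0  # nothing required, so nothing can be missing
    text = output.lower()
    hits = sum(
        1
        for kw in required_keywords
        if re.search(rf"\b{re.escape(kw.lower())}\b", text)
    )
    return hits / len(required_keywords)


def run_eval(test_set: list[dict], app: Callable[[str], str]) -> float:
    """Run the app on each test case and return the mean evaluator score."""
    if not test_set:
        raise ValueError("test_set is empty")
    scores = [
        keyword_coverage_evaluator(app(case["input"]), case["keywords"])
        for case in test_set
    ]
    return sum(scores) / len(scores)


# Usage with a stubbed "app" standing in for a real LLM call (hypothetical data).
def fake_app(question: str) -> str:
    if "refund" in question:
        return "Your refund arrives in 5 business days."
    return "Yes, you can change your plan."


test_set = [
    {"input": "How long do refunds take?", "keywords": ["refund", "days"]},
    {"input": "Can I change my plan?", "keywords": ["plan", "billing"]},
]
print(run_eval(test_set, fake_app))  # first case scores 1.0, second 0.5
```

Whole-word matching is a deliberate choice here: it keeps "refunds" from counting as "refund", which is stricter than a plain substring check.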

Comprehensive Observability & Tracing

Gain full visibility into your LLM applications in production. Agenta traces each request, so you can see the exact chain of steps and pinpoint where failures occur. You can annotate these traces with your team or collect feedback from end users. With one click, you can also turn any problematic trace into a permanent test case for future experiments, which helps you catch the same error if it reappears.

Collaborative Workflow for Teams

Agenta breaks down silos by providing a unified platform for your entire team. Domain experts can edit and test prompts through an intuitive UI without needing engineering help. Product managers can run evaluations and compare experiment results visually. Meanwhile, developers have full API access, ensuring parity between UI and programmatic workflows. This brings everyone into one cohesive development cycle, from idea to deployment.

Use Cases of Agenta

Developing and Refining Customer Support Chatbots

Teams building AI-powered support agents use Agenta to manage hundreds of prompt variations for different query types. Subject matter experts can directly tweak responses for accuracy and tone in the playground, while automated evaluations check for helpfulness and safety before any update is deployed. Observability tools then monitor live conversations to quickly catch and fix any confusing or incorrect answers.

Building Reliable Content Generation Systems

Marketing and content teams use Agenta to create systems that generate blog posts, product descriptions, or social media content. They can compare outputs from different models like GPT-4 and Claude in the playground, run evaluations for brand voice adherence and SEO quality, and use human evaluators to provide final approval. This creates a reliable, scalable content pipeline.

Creating Complex AI Agents and Workflows

When building multi-step AI agents that perform tasks like data analysis or research, debugging is hard. Agenta allows developers to evaluate each intermediate step in the agent's reasoning chain (the full trace), not just the final answer. This makes it possible to identify exactly which step in a complex workflow is failing, which can substantially reduce debugging time.
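
To make trace-level evaluation concrete, the sketch below models a trace as an ordered list of steps and applies a separate check to each named step. It is plain Python, not Agenta's trace format or API; the `Span` class, the step names (`retrieve`, `analyze`, `answer`), and the checks are all hypothetical. In the example, the final answer looks fine on its own, but the per-step checks reveal that the retrieval step returned nothing.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Span:
    """One step in a hypothetical agent trace."""
    name: str
    output: str


def evaluate_trace(
    trace: list[Span], checks: dict[str, Callable[[str], bool]]
) -> list[tuple[str, bool]]:
    """Apply the check registered for each step name; steps without a check are skipped."""
    return [
        (span.name, checks[span.name](span.output))
        for span in trace
        if span.name in checks
    ]


def first_failing_step(results: list[tuple[str, bool]]) -> Optional[str]:
    """Return the name of the earliest failing step, or None if all checks passed."""
    return next((name for name, passed in results if not passed), None)


# Illustrative trace: the final answer is non-empty, but retrieval found nothing.
trace = [
    Span("retrieve", ""),
    Span("analyze", "No data available; estimating from general knowledge."),
    Span("answer", "Revenue grew last quarter."),
]
checks = {
    "retrieve": lambda out: len(out.strip()) > 0,  # retrieval must return something
    "answer": lambda out: len(out.strip()) > 0,    # final answer must be non-empty
}
results = evaluate_trace(trace, checks)
print(results)
print(first_failing_step(results))
```

A check on the final answer alone would pass this trace; only the step-level check on `retrieve` exposes the failure.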

Academic and Research Experimentation

Researchers and students working with LLMs use Agenta to rigorously track their experiments. They can version-control every prompt and parameter change, run systematic evaluations to compare different approaches, and maintain a clear history of what was tried and what results it yielded. This brings reproducibility and structure to LLM research projects.

Frequently Asked Questions

Is Agenta really open-source?

Yes, absolutely! Agenta is fully open-source. You can view the complete source code on GitHub, contribute to the project, and self-host the platform on your own infrastructure. This gives you full control and flexibility, avoiding any vendor lock-in for your core LLM operations.

How does Agenta work with different AI models and frameworks?

Agenta is model-agnostic and framework-friendly. It seamlessly integrates with popular providers like OpenAI, Anthropic, and Cohere, as well as open-source models. It also works with AI development frameworks such as LangChain and LlamaIndex. You can use the best tool for each job without being tied to a single vendor.

Can non-technical team members really use Agenta?

Definitely! A key goal of Agenta is to empower every team member. Domain experts and product managers can use the intuitive web UI to experiment with prompts, run evaluations, and review results without writing a single line of code. This democratizes the LLM development process.

What is "LLM-as-a-judge" in evaluations?

"LLM-as-a-judge" is a powerful evaluation method where you use one LLM (like GPT-4) to critique or score the output of another LLM application. For example, you can ask the judge model to rate an answer for accuracy or helpfulness on a scale. Agenta has built-in tools to easily set up and run these automated, AI-powered evaluations at scale.
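
The sketch below shows the basic shape of an LLM-as-a-judge evaluator in Python. It is not Agenta's built-in implementation: the prompt wording, the 1 to 5 scale, and the `Score: <n>` reply format are illustrative choices, and `call_judge` is a hypothetical placeholder for whatever function sends a prompt to your judge model and returns its text reply. Requiring a fixed reply format and rejecting unparsable replies keeps a malformed judge response from silently turning into a score.

```python
import re
from typing import Callable

# Illustrative judge prompt; real setups usually add a rubric and examples.
JUDGE_TEMPLATE = (
    "You are grading an assistant's answer.\n"
    "Question: {question}\n"
    "Answer: {answer}\n"
    "Rate the answer's helpfulness from 1 (useless) to 5 (excellent).\n"
    "End your reply with a line of the form 'Score: <n>'."
)


def judge_score(question: str, answer: str, call_judge: Callable[[str], str]) -> int:
    """Ask a judge model to rate an answer and parse the 1-5 score from its reply.

    `call_judge` is a placeholder: any function that sends a prompt to an LLM
    and returns the text of its reply.
    """
    prompt = JUDGE_TEMPLATE.format(question=question, answer=answer)
    reply = call_judge(prompt)
    matches = re.findall(r"Score:\s*([1-5])\b", reply)
    if not matches:
        raise ValueError(f"judge reply has no parsable score: {reply!r}")
    return int(matches[-1])  # use the last score line if the judge wrote several


# Usage with a stubbed judge instead of a real model call.
def fake_judge(prompt: str) -> str:
    return "The answer is correct but terse.\nScore: 4"


print(judge_score("What is 2 + 2?", "4", fake_judge))
```

Passing the judge in as a function keeps the scoring logic independent of any particular model provider and makes it easy to test with a stub, as above.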

Pricing of Agenta

Agenta is an open-source platform, which means the core software is free to use forever. You can download, self-host, and modify it without any cost. The company also offers a cloud-hosted version for teams that prefer a managed service, and enterprise plans with additional features and support. For specific details on cloud subscription tiers, enterprise pricing, and a detailed comparison, please visit the official Agenta website or contact their sales team to book a demo.

You may also like:

Blueberry

Blueberry is a Mac app that combines your editor, terminal, and browser in one workspace. Connect Claude, Codex, or any model and it sees everything.

Anti Tempmail

Transparent email intelligence verification API for Product, Growth, and Risk teams

My Deepseek API

Affordable, Reliable, Flexible - Deepseek API for All Your Needs